Metric Configuration

By standardizing global benchmarks for functional, performance, and stability test metrics, 90% of testing scenarios can be evaluated uniformly while still allowing interface-level flexibility. Once configured, the settings apply automatically to all new test tasks, significantly improving testing efficiency.

⚠️ Key Notes:

- Current metric configurations serve as default global settings, effective for all projects.

- Interface-level configurations override global settings. Configuration path:

API → Target API → Metric Configuration
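The override rule above can be sketched as a simple layered lookup. This is a minimal illustration only: the key names (`error_rate_max_pct`, `p90_response_ms_max`, `concurrent_users`) and the `effective_metrics` helper are hypothetical and are not the platform's actual storage format or API.

```python
from typing import Any, Dict

# Hypothetical global defaults; the values mirror the defaults listed in this guide.
GLOBAL_METRICS: Dict[str, Any] = {
    "error_rate_max_pct": 0.01,
    "p90_response_ms_max": 500,
    "concurrent_users": 500,
}


def effective_metrics(global_cfg: Dict[str, Any],
                      interface_cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Return the global settings with interface-level values layered on top."""
    merged = dict(global_cfg)     # copy, so the global defaults stay untouched
    merged.update(interface_cfg)  # interface-level values win on conflict
    return merged


# An interface that only overrides the error rate inherits everything else.
payment_api = effective_metrics(GLOBAL_METRICS, {"error_rate_max_pct": 0.0})
```

Here `payment_api` keeps the global response-time and concurrency defaults and replaces only the error-rate limit.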

I. Functional Test Metrics Configuration

Defines baseline pass criteria for smoke tests and security tests

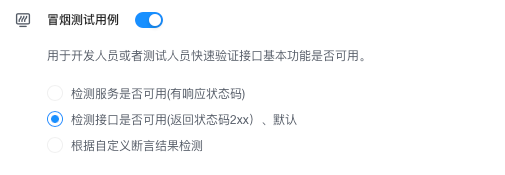

1. Smoke Test Configuration

Purpose: Verify basic API availability

Steps:

- Navigate to Configuration Center → Metric Management → Functional Metrics and click "Edit"

- Toggle on Smoke Test Cases

- Select detection strategies (default: "API Availability"):

- ☑️ Service Responsiveness: Non-5xx status codes

- ☑️ API Availability: 2xx status codes (recommended)

- ☑️ Custom Rules: Script-based evaluation
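To make the three detection strategies concrete, the sketch below expresses each one as a check on the HTTP status code. The function names and strategy identifiers are assumptions made for illustration; the platform's real custom-rule scripting interface is not described in this guide.

```python
from typing import Callable, Optional


def service_responsive(status: int) -> bool:
    """Service Responsiveness: any non-5xx status passes."""
    return not 500 <= status <= 599


def api_available(status: int) -> bool:
    """API Availability: only 2xx statuses pass."""
    return 200 <= status <= 299


def smoke_pass(status: int,
               strategy: str = "api_availability",
               custom_rule: Optional[Callable[[int], bool]] = None) -> bool:
    """Evaluate one response against the selected (hypothetically named) strategy."""
    if strategy == "service_responsiveness":
        return service_responsive(status)
    if strategy == "api_availability":
        return api_available(status)
    if strategy == "custom":
        if custom_rule is None:
            raise ValueError("the custom strategy needs a rule function")
        return custom_rule(status)
    raise ValueError(f"unknown strategy: {strategy}")
```

A 404 response, for example, passes under Service Responsiveness but fails under the stricter API Availability default.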

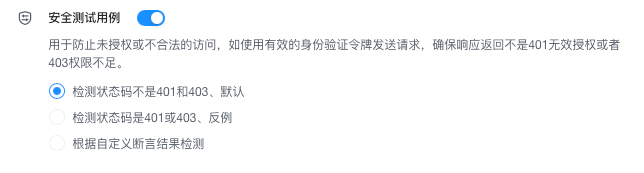

2. Security Test Configuration

Purpose: Prevent unauthorized access

Steps:

- On the same page, toggle on Security Test Cases

- Select validation logic:

- ✅ Positive Validation: Exclude 401/403 statuses (default)

- ✅ Negative Validation: Require 401/403

- ✅ Custom Policy: Script-based authorization logic

- Click "Save"
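The validation modes above can be read as follows: positive validation passes when the response is not an authorization failure, and negative validation passes only when it is. The sketch below assumes that reading; the mode names and the `security_pass` helper are hypothetical.

```python
from typing import Callable, Optional

# Status codes treated as authorization failures in this guide.
AUTH_FAILURE_STATUSES = frozenset({401, 403})


def security_pass(status: int,
                  mode: str = "positive",
                  custom_policy: Optional[Callable[[int], bool]] = None) -> bool:
    """Evaluate one response under the selected validation mode."""
    if mode == "positive":
        # A caller with valid access must not be rejected.
        return status not in AUTH_FAILURE_STATUSES
    if mode == "negative":
        # A caller without access must be rejected with 401 or 403.
        return status in AUTH_FAILURE_STATUSES
    if mode == "custom":
        if custom_policy is None:
            raise ValueError("the custom mode needs a policy function")
        return custom_policy(status)
    raise ValueError(f"unknown mode: {mode}")
```

Under this reading, a request sent without credentials that comes back as 200 fails negative validation, which is the unauthorized-access case the test is meant to catch.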

II. Performance Test Metrics Configuration

Sets core thresholds for stress testing

1. Core Parameter Matrix

| Metric | Default | Business Impact | Optimization Suggestion |
|---|---|---|---|
| Concurrent Users | 500 | System capacity limit | Set at 120% of production traffic |
| 90% Response Time | ≤500 ms | User experience | ≤200 ms recommended for internet services |
| Error Rate | ≤0.01% | Business reliability | Set to 0% for payment systems |
| Ramp-up Step | 50 users | Test precision | 10% concurrency increase per step |
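As an illustration of how the response-time and error-rate thresholds might be checked against raw results, the sketch below computes a 90th-percentile latency and an error rate and compares them with the defaults from the table. The nearest-rank percentile method is an assumption; this guide does not state which method the platform uses, and the function names are hypothetical.

```python
from typing import Sequence


def p90(latencies_ms: Sequence[float]) -> float:
    """90th-percentile latency using the nearest-rank method (an assumption)."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    rank = (9 * len(ordered) + 9) // 10  # integer ceil(0.9 * n)
    return ordered[rank - 1]


def error_rate_pct(total_requests: int, failed_requests: int) -> float:
    """Failed requests as a percentage of all requests."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return 100.0 * failed_requests / total_requests


def meets_thresholds(latencies_ms: Sequence[float],
                     total_requests: int,
                     failed_requests: int,
                     p90_max_ms: float = 500.0,
                     error_max_pct: float = 0.01) -> bool:
    """True when both the p90 latency and the error rate are within limits."""
    return (p90(latencies_ms) <= p90_max_ms
            and error_rate_pct(total_requests, failed_requests) <= error_max_pct)
```

Note how strict the default error rate is: at ≤0.01%, a run of 10,000 requests tolerates at most one failure.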

2. Configuration Steps

- Navigate to Metrics → Performance Metrics and click "Edit"

- Modify key parameters

- Click "Save"

⚠️ Financial Systems Note:

- Error rate must be set to 0%

- Achieve via global or interface-level configuration

- Recommended ramp-up step: 10 (precision-first)
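The effect of the ramp-up step on test precision can be seen by listing the load stages it produces. The helper below is a hypothetical sketch, not the platform's scheduler: it assumes load rises by a fixed number of users per stage until the target concurrency is reached.

```python
from typing import List


def ramp_up_stages(target_users: int, step: int) -> List[int]:
    """Concurrency level at each stage, rising by `step` up to `target_users`."""
    if target_users <= 0 or step <= 0:
        raise ValueError("target_users and step must be positive")
    stages = list(range(step, target_users, step))
    stages.append(target_users)  # always finish exactly at the target
    return stages


default_plan = ramp_up_stages(500, 50)    # default step: 10 stages
financial_plan = ramp_up_stages(500, 10)  # precision-first step: 50 stages
```

With the default step of 50, a 500-user test has 10 stages; a step of 10 yields 50 stages, so the load level at which errors first appear can be located more precisely, at the cost of a longer ramp-up.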

III. Stability Test Metrics Configuration

Verifies long-term system reliability standards

1. Core Parameter Matrix

| Metric | Default | Business Impact | Optimization Suggestion |
|---|---|---|---|
| Concurrent Users | 200 | Steady load | Set at 70% of production traffic |
| Test Duration | 30 min | Stability window | 24 hours recommended |
| 90% Response Time | ≤500 ms | User experience | ≤200 ms recommended for internet services |
| Error Rate | ≤0.01% | Business reliability | Set to 0% for payment systems |

2. Configuration Steps

- Navigate to Metric Management → Stability Metrics and click "Edit"

- Business Layer Configuration:

- Concurrent Users: 200

- Duration: 30 minutes

- Error Rate: ≤0.01%

- Resource Layer (Optional):

| Resource | Threshold | Notes |
|---|---|---|
| CPU | ≤75% | Triggers alerts when exceeded |
| Memory | ≤75% | Critical for JVM applications |
| Disk | ≤75% | Key metric for logging systems |
| Network | ≤75 MB/s | Core metric for video systems |

- Click "Save"

⚠️ Prerequisite for Resource Monitoring:

Complete Configuration → Nodes setup first
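The resource-layer thresholds amount to a per-resource upper bound on each monitoring sample. The sketch below shows that check under assumed names: the sample keys (`cpu_pct`, `memory_pct`, `disk_pct`, `network_mb_s`) and the `breached_resources` helper are hypothetical, and the real node monitoring data format is not covered here.

```python
from typing import Dict, List

# Upper limits taken from the resource-layer thresholds in this guide.
RESOURCE_LIMITS: Dict[str, float] = {
    "cpu_pct": 75.0,
    "memory_pct": 75.0,
    "disk_pct": 75.0,
    "network_mb_s": 75.0,
}


def breached_resources(sample: Dict[str, float],
                       limits: Dict[str, float] = RESOURCE_LIMITS) -> List[str]:
    """Names of resources in one monitoring sample that exceed their limit."""
    return [name for name, limit in limits.items()
            if name in sample and sample[name] > limit]


# Memory at exactly 75% is still within the "≤75%" limit; CPU at 80% is not.
alerts = breached_resources({"cpu_pct": 80.0, "memory_pct": 75.0, "network_mb_s": 10.0})
```

Resources missing from a sample are skipped rather than treated as breaches, which matches the resource layer being optional.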

IV. Efficiency Metric Grading System

Quantifies team productivity with scoring standards

1. Five-Dimensional Evaluation Matrix

| Tier | Workload | Completion Rate ≤ | Overdue Rate ≤ | Pass Rate ≥ | Savings Rate ≥ |
|---|---|---|---|---|---|
| Poor | 100 | 35% | 35% | 30% | 0% |
| Weak | 100 | 50% | 25% | 45% | 0% |
| Medium | 100 | 65% | 15% | 60% | 10% |
| Good | 100 | 90% | 5% | 85% | 20% |
| Excellent | 100 | 100% | 2% | 90% | 30% |
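This guide does not spell out how the five dimensions combine into a single tier. As one possible reading, the sketch below uses only two of them: it assigns the highest tier whose pass-rate floor and overdue-rate ceiling are both satisfied. The combination rule and the `grade` function are assumptions made for illustration; workload, completion rate, and savings rate are deliberately left out.

```python
from typing import List, Optional, Tuple

# (tier, pass-rate floor %, overdue-rate ceiling %), best tier first.
# Values come from the matrix; combining them this way is an assumption.
TIERS: List[Tuple[str, float, float]] = [
    ("Excellent", 90.0, 2.0),
    ("Good", 85.0, 5.0),
    ("Medium", 60.0, 15.0),
    ("Weak", 45.0, 25.0),
    ("Poor", 30.0, 35.0),
]


def grade(pass_rate_pct: float, overdue_rate_pct: float) -> Optional[str]:
    """Highest tier whose pass-rate floor and overdue ceiling are both met."""
    for tier, pass_floor, overdue_ceiling in TIERS:
        if pass_rate_pct >= pass_floor and overdue_rate_pct <= overdue_ceiling:
            return tier
    return None  # below even the "Poor" thresholds
```

Under this reading, a team with an 88% pass rate but a 20% overdue rate lands in "Weak", because the overdue rate is the limiting dimension.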

2. Applications

- Efficiency Dashboard: Auto-generates team heatmaps

- Sprint Retrospectives: Identifies bottlenecks

- Performance Reviews: Objectively quantifies output